Best practices, front and center

Facing data challenges? Companies such as Meta head off data problems before they happen by adopting data engineering best practices like Functional Data Engineering.

Now you can benefit from the same practices by adopting Trel. Because Trel is designed from the ground up around immutable datasets, idempotent jobs, and reproducibility, your pipelines will be more reliable and maintainable than ever.

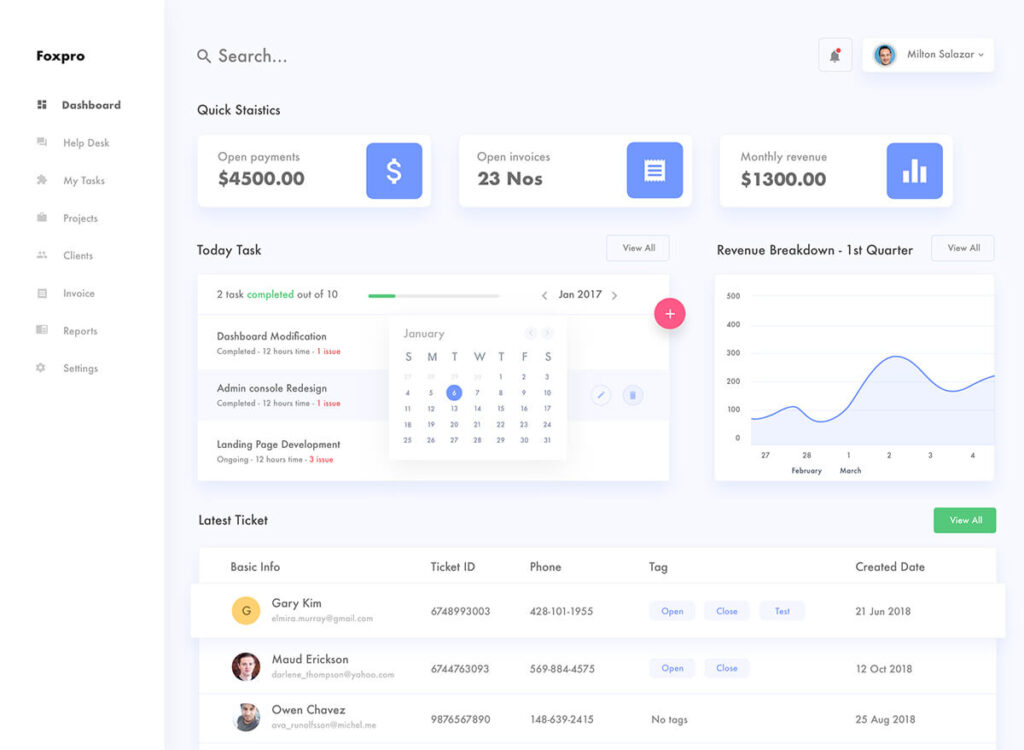

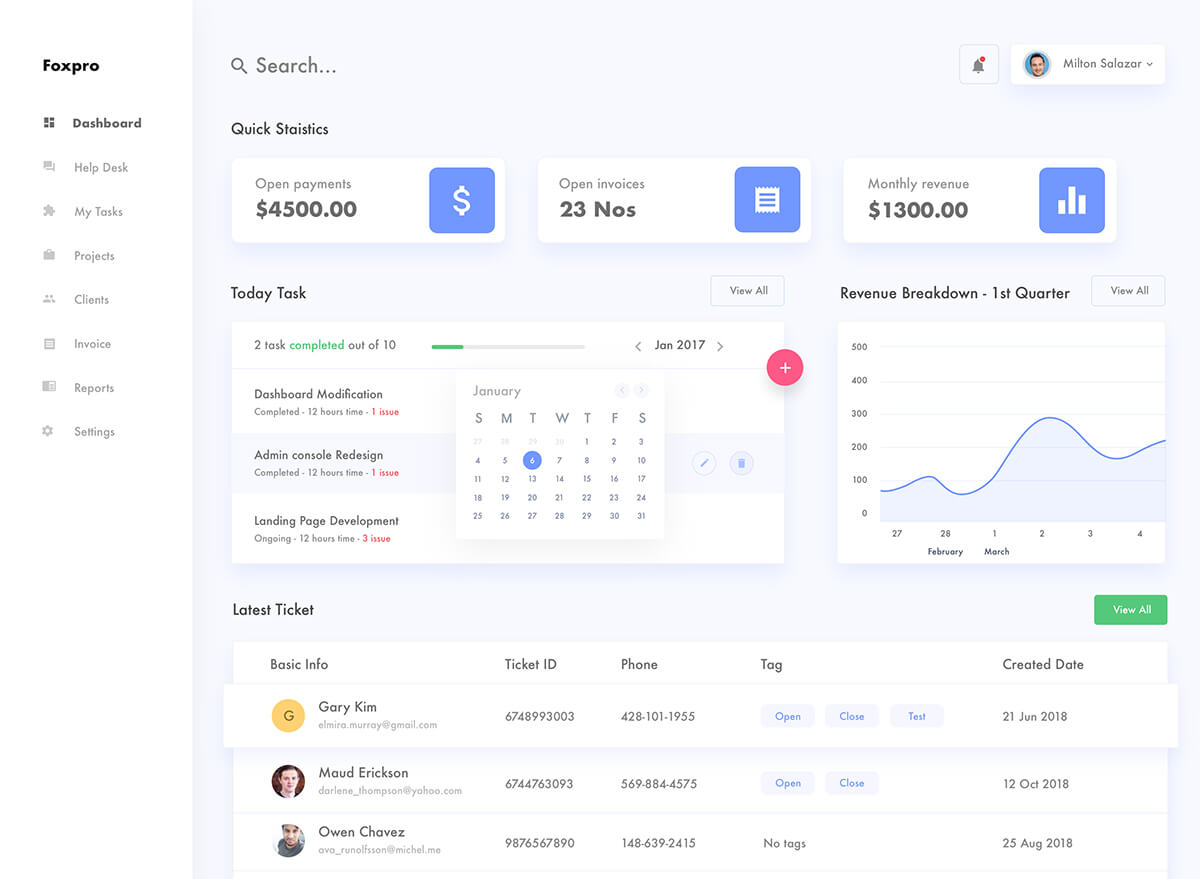

What is Trel?

Trel is a SaaS product designed to form the core of a complete enterprise data platform, with strong support for best practices such as Functional Data Engineering and IBM DataOps.

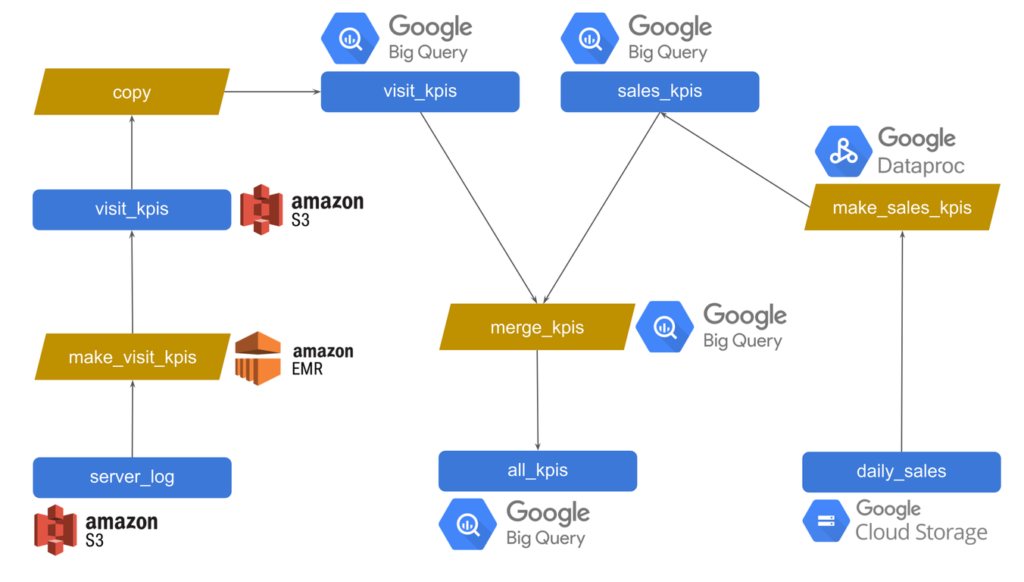

It offers a data catalog that actively organizes your data, a new approach to job automation that moves you beyond DAGs, and comprehensive design guidelines.

Because Trel carefully delegates data storage and compute to your preferred technologies, you can keep working with the same tools while benefiting from modern best practices.

Announcement

New Salesforce connectors

Load your data from Salesforce into Trel using our pre-built sensor. The tables you specify are loaded and cataloged automatically.

After computing your results, you can use the reverse connector to push them back to Salesforce.

Check out our blog post.

Features

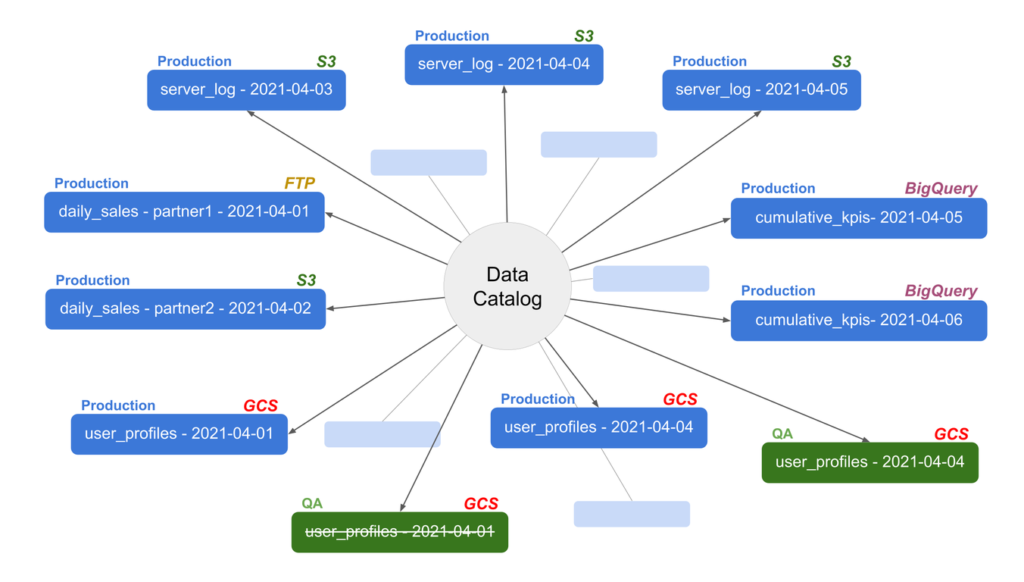

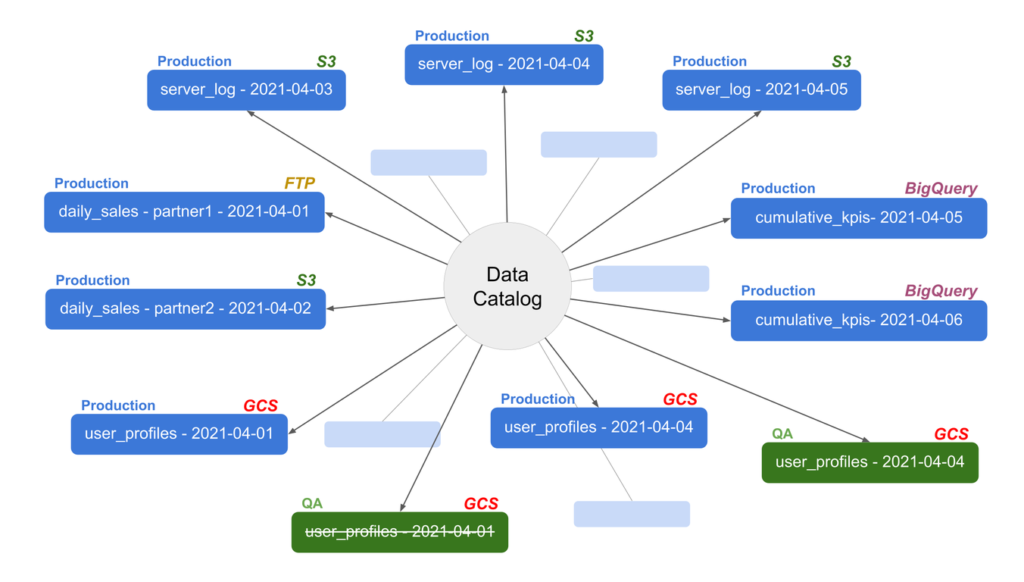

Trel’s unique data catalog takes an active role in enforcing organizational policies for data storage and security. It attaches standardized metadata to your datasets, which breaks down departmental silos and encourages reuse.

This ACID data catalog offers a consistent view of valid and usable datasets. It powers automation using a novel mechanism called “catalog-based dependency.”

The Trel catalog proactively takes responsibility for organizing your data.

By removing data organization from user code, Trel makes centralized data governance possible.

As a bonus, this boosts team productivity and enables self-service for data scientists.

The Trel design guidelines and the Trel data platform are geared toward immutable datasets.

This allows the data catalog to provide version control and time travel on any data store, such as S3 or BigQuery.
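To make the idea concrete, here is a minimal sketch of why immutability enables version control and time travel, assuming an append-only catalog held in memory. Each write registers a new version with its own storage path instead of overwriting the previous one, so a time-travel read simply returns the newest version registered at or before a given moment. `VersionedCatalog`, `register`, `as_of`, and the `s3://bucket/...` paths are hypothetical illustrations, not Trel's API.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class DatasetVersion:
    name: str
    version: int
    path: str  # immutable storage location for this version (hypothetical)
    registered_at: datetime


class VersionedCatalog:
    """Hypothetical append-only catalog: versions are added, never overwritten."""

    def __init__(self) -> None:
        self._versions: dict[str, list[DatasetVersion]] = {}

    def register(self, name: str, path: str, at: datetime) -> DatasetVersion:
        history = self._versions.setdefault(name, [])
        entry = DatasetVersion(name, len(history) + 1, path, at)
        history.append(entry)
        return entry

    def as_of(self, name: str, at: datetime) -> Optional[DatasetVersion]:
        """Time travel: the newest version registered at or before `at`."""
        candidates = [v for v in self._versions.get(name, []) if v.registered_at <= at]
        return max(candidates, key=lambda v: v.registered_at, default=None)


# Usage: two versions of the same dataset, read as of mid-January.
catalog = VersionedCatalog()
catalog.register("sales", "s3://bucket/sales/v1", datetime(2024, 1, 1))
catalog.register("sales", "s3://bucket/sales/v2", datetime(2024, 2, 1))
print(catalog.as_of("sales", datetime(2024, 1, 15)).path)  # s3://bucket/sales/v1
```

Because old versions are never mutated, any past state of the catalog can be reconstructed from the version history alone.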

More than just seeing historical data, Trel makes it trivial to reproduce entire historical pipelines for what-if experiments.

A centralized lifecycle management system lets you set rules that control storage costs in any storage technology.
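As an illustration of what such a rule might look like, the sketch below assumes a simple retention policy: always keep the newest few versions of a dataset, and mark older versions for deletion once they exceed a maximum age. The function name `expired_versions`, its parameters, and the default thresholds are hypothetical, not Trel's configuration format.

```python
from datetime import datetime, timedelta


def expired_versions(versions, now, keep_latest=3, max_age=timedelta(days=30)):
    """Return the IDs of versions that a hypothetical lifecycle rule would delete.

    `versions` is a list of (version_id, created_at) tuples. A version survives if
    it is one of the `keep_latest` newest versions or is no older than `max_age`.
    """
    newest_first = sorted(versions, key=lambda v: v[1], reverse=True)
    protected = {vid for vid, _ in newest_first[:keep_latest]}
    return [
        vid
        for vid, created in newest_first
        if vid not in protected and now - created > max_age
    ]


# Usage: six snapshots taken 10 days apart.
now = datetime(2024, 6, 1)
history = [(f"v{i}", now - timedelta(days=10 * i)) for i in range(1, 7)]
print(expired_versions(history, now))  # ['v4', 'v5', 'v6']
```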

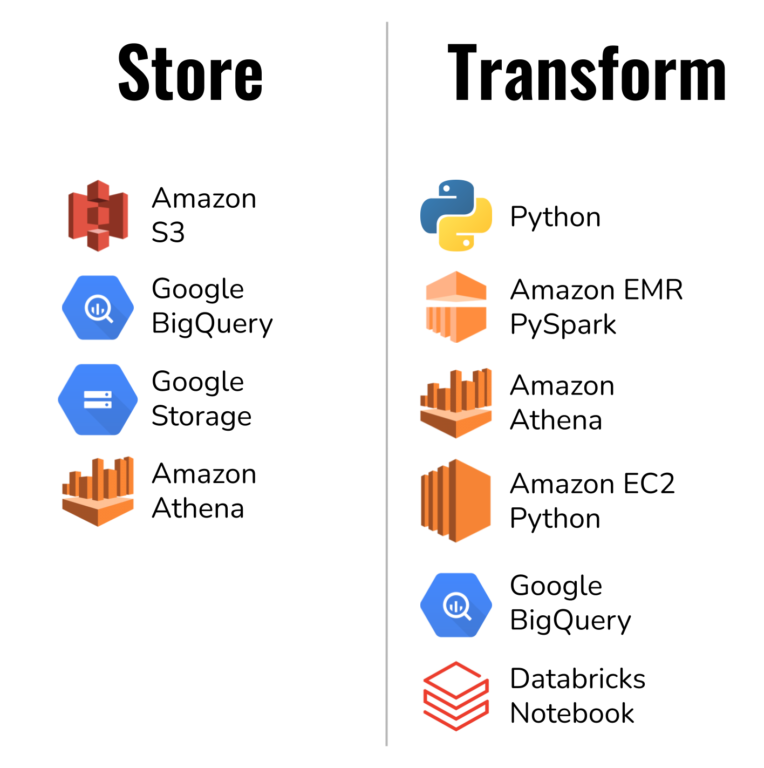

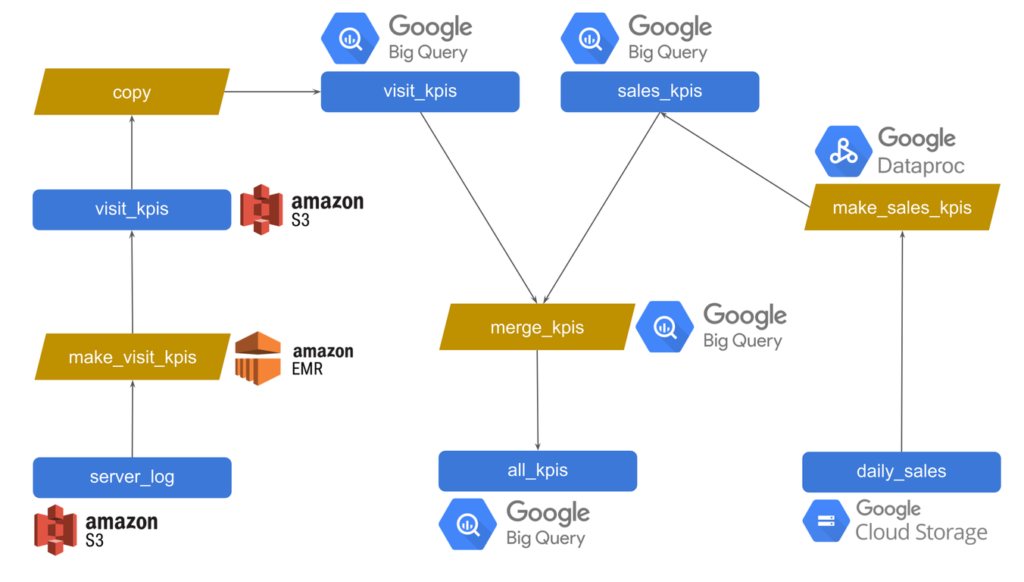

Trel lets you choose whichever computational technology you prefer for each job. There is no global restriction, and jobs that share data do not have to use the same technology.

However, each compute technology must be compatible with the storage technology it works with.

We proactively add support for new technologies based on customer needs.

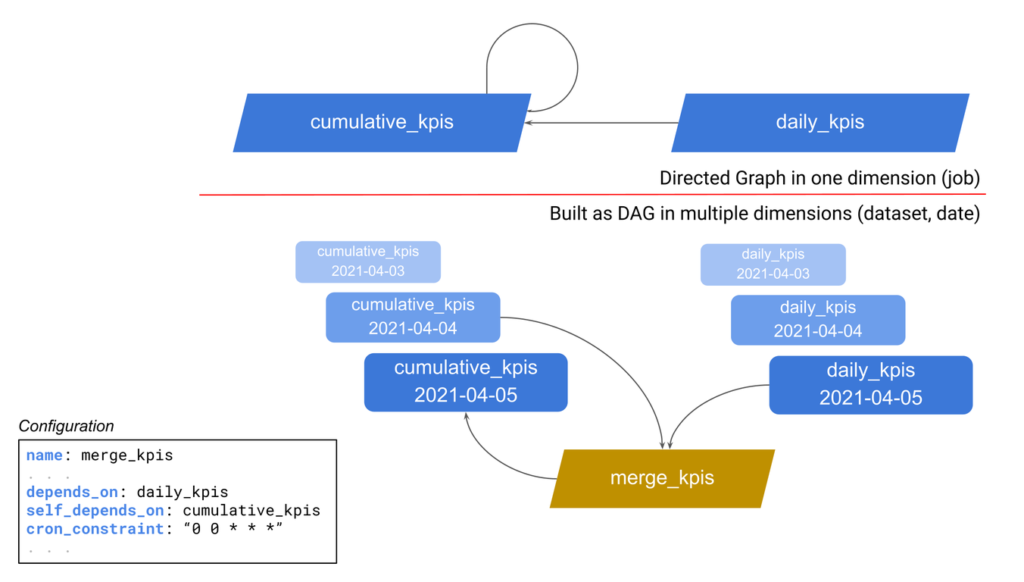

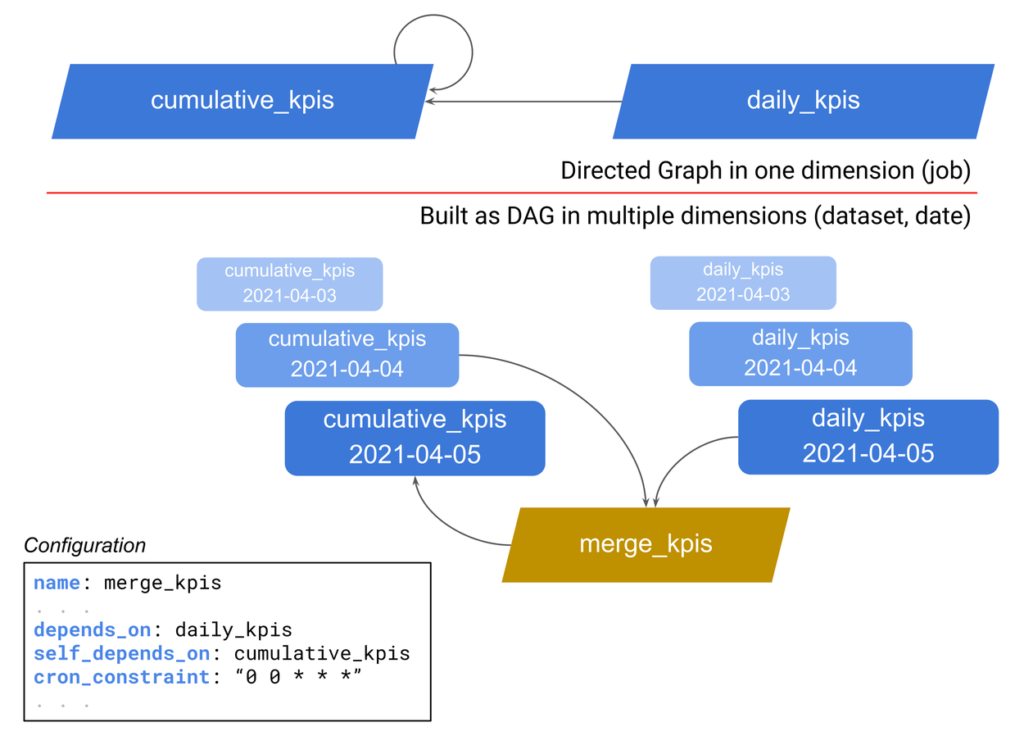

Trel’s unique “catalog-based dependency” automation mechanism works without any coding. This eliminates a significant source of data errors.

It also boosts team productivity, and because the approach is simpler, data scientists can use it on a self-serve basis.

The catalog-based dependency mechanism performs strict validation of input and output paths. Even with substantial delays and job failures, your jobs are guaranteed to run against the correct inputs.
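The following sketch illustrates the general idea behind catalog-based dependencies and strict path validation, under simplifying assumptions: the catalog is a plain dictionary mapping a (dataset, instance date) pair to the path of a valid dataset, a job waits until every input exists for the exact date it is processing, and its output path is derived deterministically from the job name and date so that a retry writes to the same place. `plan_run`, the catalog layout, and the `s3://warehouse/...` paths are hypothetical, not Trel's actual interface.

```python
from datetime import date
from typing import Optional

# Hypothetical catalog: (dataset name, instance date) -> path of a valid dataset.
Catalog = dict[tuple[str, date], str]


def plan_run(catalog: Catalog, job: str, inputs: list[str], instance: date) -> Optional[dict]:
    """Return the exact inputs and output for one job run, or None if it must wait."""
    missing = [name for name in inputs if (name, instance) not in catalog]
    if missing:
        # Never fall back to an older or partial input; keep waiting instead.
        return None
    return {
        "inputs": {name: catalog[(name, instance)] for name in inputs},
        # Deterministic output path: rerunning the same instance targets the same slot.
        "output": f"s3://warehouse/{job}/{instance.isoformat()}",
    }


# Usage: the "customers" input for May 1 has not arrived yet, so the job waits.
catalog: Catalog = {("orders", date(2024, 5, 1)): "s3://warehouse/orders/2024-05-01"}
print(plan_run(catalog, "daily_revenue", ["orders", "customers"], date(2024, 5, 1)))  # None

catalog[("customers", date(2024, 5, 1))] = "s3://warehouse/customers/2024-05-01"
print(plan_run(catalog, "daily_revenue", ["orders", "customers"], date(2024, 5, 1))["output"])
# s3://warehouse/daily_revenue/2024-05-01
```

Because readiness is decided by what the catalog holds rather than by when an upstream job happened to finish, a delayed or failed upstream run simply keeps the downstream job waiting instead of letting it read stale data.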

The Trel data catalog naturally lets you treat all computed data as an internal product, consistent with the data mesh architecture.

In the same vein, you can easily set up an SLA for any dataset and be alerted if it does not arrive on time.
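A dataset SLA can be reduced to a simple check. The sketch below assumes each dataset instance has an expected arrival deadline and an optional arrival timestamp taken from the catalog; the SLA is breached if the data arrived after the deadline, or if it is still missing once the deadline has passed. The function name and signature are hypothetical, and in practice the result would feed whatever alerting channel you use.

```python
from datetime import datetime
from typing import Optional


def sla_breached(expected_by: datetime, arrived_at: Optional[datetime], now: datetime) -> bool:
    """Hypothetical SLA check for a single dataset instance."""
    if arrived_at is not None:
        return arrived_at > expected_by  # arrived, but late
    return now > expected_by  # still missing after the deadline


deadline = datetime(2024, 5, 2, 6, 0)
check_time = datetime(2024, 5, 2, 7, 0)
print(sla_breached(deadline, None, check_time))                         # True
print(sla_breached(deadline, datetime(2024, 5, 2, 5, 30), check_time))  # False
```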

Supported technologies

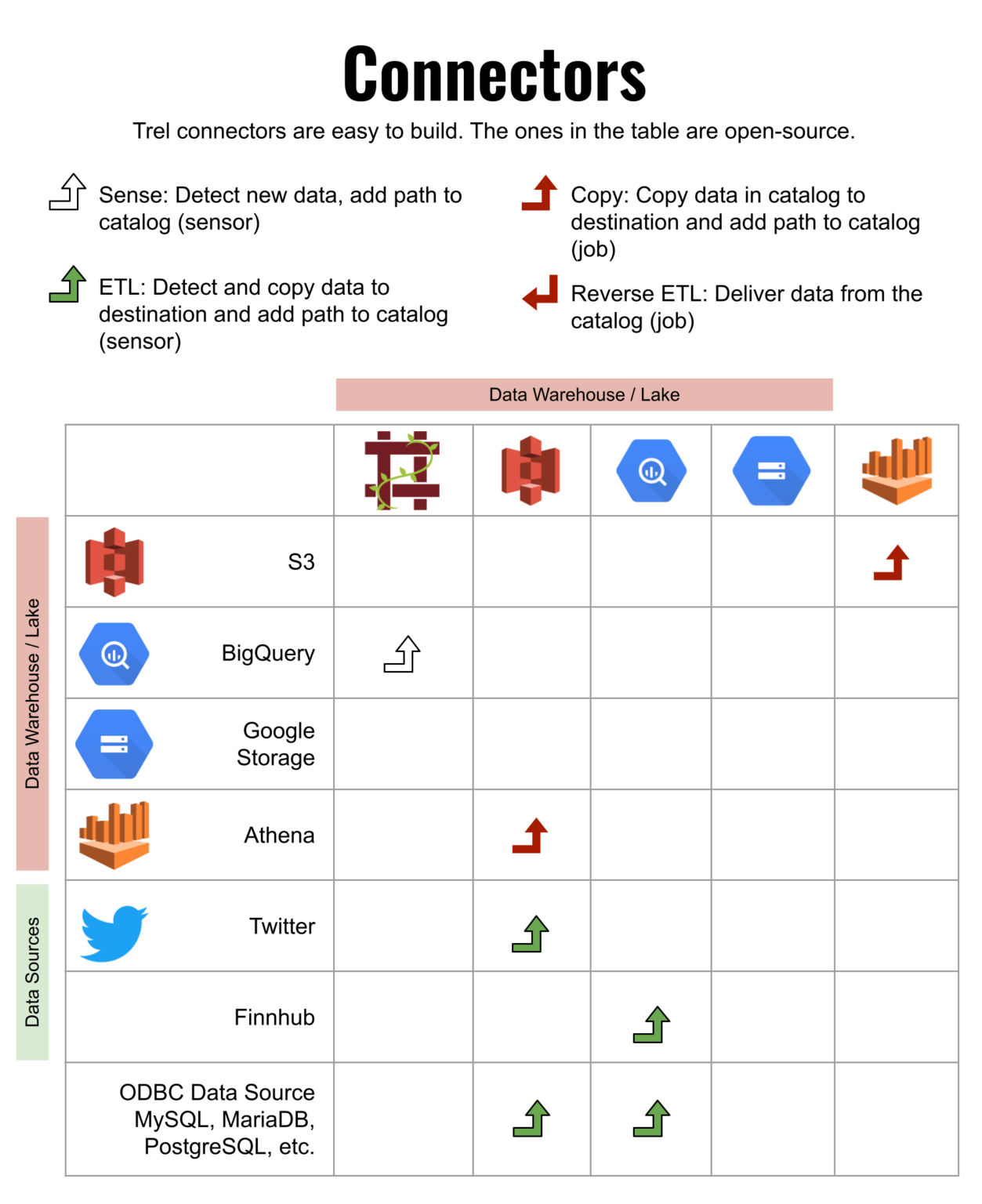

Supported connectors

Benefits

Conquer complexity

Decoupled jobs and code-free dependencies make it easy to scale.

Reliability from strictness

Formal guarantees on data quality, even when jobs fail.

Fix your data with a few clicks

Resolving disruptions is no longer a concern when you have a time machine.

TREL TECHNOLOGY

Organize and catalog the complete history of your data

Our job design guidelines ensure that jobs produce immutable data in the catalog. As a result, the catalog captures not just your current data but every change ever made to it.

Immutable data also offers outstanding lineage tracking and debugging capabilities.

TREL TECHNOLOGY

Express complex dependencies without code

When we decompose your pipeline into jobs, we schedule them using formal scheduling patterns, implemented as plugins that we have developed over the years.

These patterns let us express your job dependencies strictly, without code or DAGs.

Furthermore, thanks to our innovative catalog-based dependency system, we can architect a unified data mesh of data engineering (DE) and data science (DS) jobs rather than a collection of disconnected DAGs.

TREL TECHNOLOGY

Storage & processing technology agnostic

Trel can fully support a new storage or computational technology once a plugin for it is written.

During decomposition, we mix and match data science and data engineering technologies into a unified data pipeline that meets your requirements.

TREL WORKFLOW IMPROVEMENT

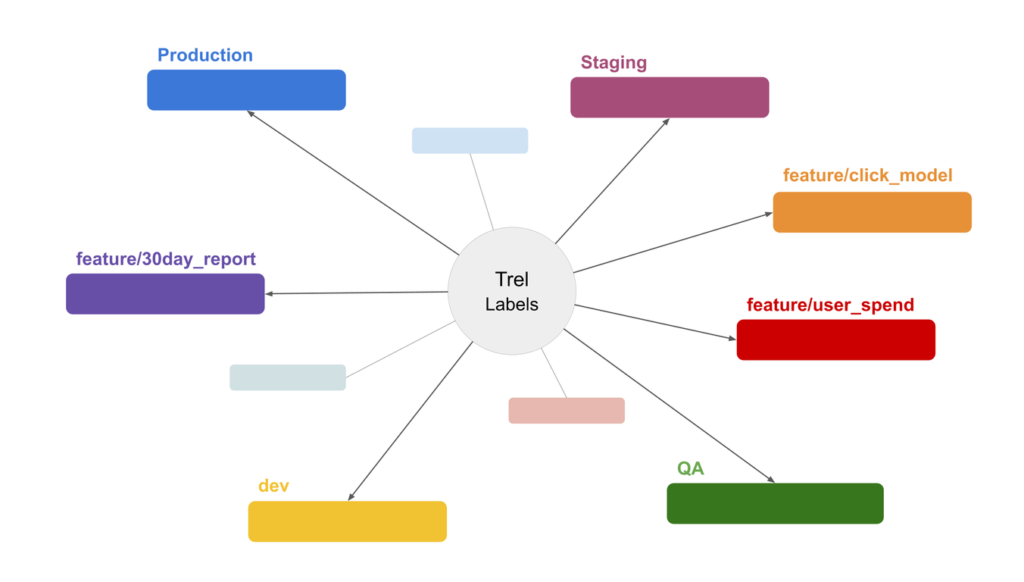

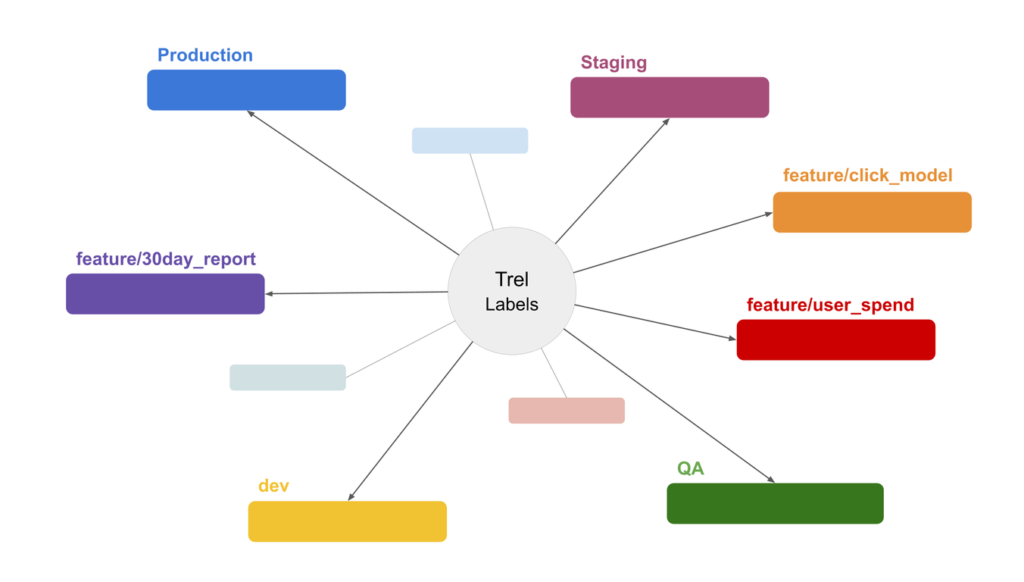

Unlimited test environments

With the Trel platform, you can safely test each feature in its own dedicated environment.

During decomposition, we design your data transformation code to be independent of its execution environment. As a result, QA results are guaranteed to be reproducible in production.
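One common way to make transformation code independent of its execution environment is to keep the business logic pure and have the surrounding platform pass in input and output locations, rather than hard-coding them. The sketch below assumes CSV inputs with hypothetical `customer`, `qty`, and `price` columns; `transform` and `run` are illustrative names, not part of Trel. Because `run` only ever sees the paths it is given, the same code can be pointed at a QA copy or at production data without modification.

```python
import csv


def transform(rows: list[dict]) -> list[dict]:
    """Pure business logic: it has no idea where the data lives."""
    return [
        {"customer": r["customer"], "total": int(r["qty"]) * int(r["price"])}
        for r in rows
    ]


def run(input_path: str, output_path: str) -> None:
    """Entry point: locations are supplied by the caller, never hard-coded."""
    with open(input_path, newline="") as f:
        rows = list(csv.DictReader(f))
    with open(output_path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=["customer", "total"])
        writer.writeheader()
        writer.writerows(transform(rows))


print(transform([{"customer": "acme", "qty": "2", "price": "5"}]))
# [{'customer': 'acme', 'total': 10}]
```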

Our process

We can help you with your data strategy and job design

We can discuss your requirements and develop a data architecture that fits your technology stack, security and regulatory requirements, and data governance policies.

We can then configure our platform for this architecture and offer it to your team, ready to use for building data pipelines and transformations.




Customer service

SaaS implementation: mission critical, accomplished

Cumulative Data is a SaaS and support company for data pipelines.

Mission

Build a data pipeline framework that gets out of the data engineers’ way so that they can build the data pipeline that the company needs.

Vision

Our efficient data pipeline framework will improve the data competency of data engineers, analysts, and data scientists, helping make a better world through sound, data-driven decisions.


Trel loves complexity

Don't settle for a simple data pipeline. Trel makes it easy to do exactly what you want. Complex machine learning workflows? No problem.

Trel says what. You say how.

Trel uses your own business logic to orchestrate your data lake and data pipeline. It does not touch your data, which is why it is truly platform agnostic.

Trel gives power to you.

Trel feels like a well-configured shell. Perform complex pipeline maintenance with just a few commands.

Make your data work hard

Trel shines once your data lands in your data lake. You can build complex and arbitrary data engineering and data science pipelines easily with Trel.

TREL

Vision

Efficient companies rely on data to make decisions. Often a sophisticated data pipeline is required to produce actionable data. We believe companies should not have to compromise on the capabilities of their data pipeline. It should be reliable, agile, and performant. With Trel, we help our clients efficiently build and maintain a no-compromise data pipeline that moves as fast as they do.

Learn how Trel can help you. See a demo and discuss your needs with a specialist.

Request a demo

Interested in learning more about Trel?

Use this form to reach out to us.

Or,